Futurist Stewart Brand once said, “Information wants to be free,” and the phrase has resonated for decades. While I like the sound of that, we need to be careful not to put “content” into the same box as “information.” It’s vital that information is free and available to people in an open society. As the Freedom of Information Act has shown, we need access to information that reveals how the government, businesses, and other officials act when they aren’t in the public view. Transparency is important, but so is the information we need to be aware, healthy, happy, and safe. Thus, public information about these matters should be accessible to all people whenever possible. I believe this is the spirit of what Brand meant.

That said, “content” isn’t just more information. Until recently, we knew that humans had put work into framing, presenting, adding context to, and possibly even generating the information presented as “content,” whether that is a newspaper article about an escaped zebra, a painting of two people with bags over their heads kissing, a song about losing the love of your life to a man riding a Vespa, or even just the best Tweet people have heard all day. If we simply equate ‘content’ with ‘information’ or even ‘data’, as some are increasingly wont to do in our modern Age of AI, we may well believe it wants to be free.

As a content creator for over thirty years with books, video games, board games, videos, and hundreds of articles out in the public view, I can tell you that even if you feel content wants to be free, it definitely doesn’t want to be exploited.

Content is Information with Extra Value

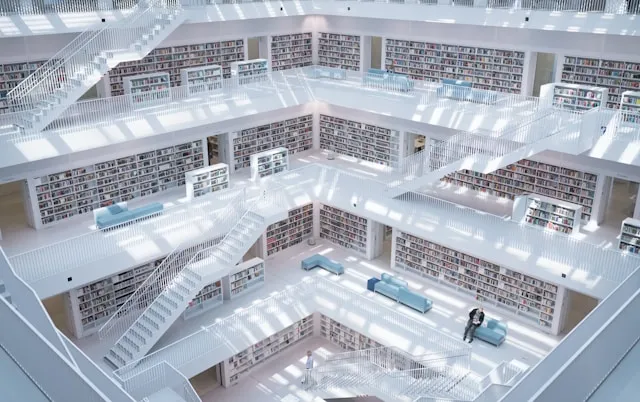

While making great content freely available for people to experience is the right thing to do (e.g., libraries and museums), it’s quite a different situation to assume businesses should have free access to all content so they can make money by Frankensteining together ‘new’ content from the bones, flesh, and sinew of creative content crafted by human beings.

Even the tools human beings use to make content get no respect from tech right now. Apple, the most art-friendly of the tech companies, just released the horror-show ad below to promote its new iPad. I never met the man, but I’ve read enough about Steve Jobs to think he would not have approved of “Crush!”, Apple’s grim new spot.

https://www.youtube.com/watch?v=ntjkwIXWtrc
Et tu, Apple?

Yet, this is where we are with AI. Big AI is making use of content created by people (Human-Created Content, if you will) to offer their customers the chance to produce new content built on the extracted value of work they did not pay to use. Simply put, if the originator of said content isn’t being paid, their work and they themselves are being exploited.

This doesn’t mean that whatever someone makes with a Large Language Model (LLM) or other AI model has actual value. They may simply be asking an AI to produce an image of Jennifer Aniston as Joan of Arc or Frida Kahlo. That may provide no value beyond the person who prompted it looking at the result and smiling briefly. But value was extracted from other people’s work to get that moment of (very) mild amusement.

Let me put my cards on the table: I don’t believe that this should happen without consent from the folks whose work enabled the system to produce that piece of content. Whether it is throwaway garbage or something the prompter plans to print on t-shirts for some side income, value was provided and only the tech folks who made it possible get to make money. The creatives are usually left out of the transaction.

Artists, actors, writers, and creative folks in general should be concerned if they cannot control their own content in this situation. Of course, copyright protects creators from having their work copied directly in the marketplace. It’s not perfect, since some countries, such as China, have a poor record of respecting intellectual property laws, but it does provide protection against direct copying. We simply need to extend that protection to programmatic copying, because that is what is happening with AI tools.

The Black Box Excuse

Most technologists have become apologists for AI when it comes to crediting and compensating the creators whose work is being used in their models. “AI is a ‘black-box solution,’ so we don’t know what all went into that content,” goes the usual line. That’s because deep learning tools provide no transparency into how the existing content in an LLM’s training data is processed into new content in response to a prompt.

When asked about the content they use for training, AI companies give responses that range from confused to secretive. Big AI doesn’t really want you to know what content was used or how much of it, but they clearly need more. This was painfully obvious when OpenAI’s CTO was interviewed by the Wall Street Journal about the company’s Sora video model. She ran the gamut of obfuscation tactics. First, she said training was done on “Publicly Available” content, apparently treating that as if it were the same as “Public Domain.” Need we clarify that Public Domain content is out of copyright and therefore free to use? That is nothing like “Publicly Available,” which, depending on how you interpret it, covers almost everything ever. She eventually just said she wouldn’t discuss what they use for training. Big surprise: they don’t want to talk about how they’re using other people’s content.

https://www.youtube.com/watch?v=mAUpxN-EIgU
Training on Everything Like They’re Union Pacific…

Copyright law provides protection, but it also assumes that people and businesses alike are going to honor it in a meaningful way. Once your work goes into the Public Domain, all bets are off. One need look no further than the speed with which someone made a splatter-fest horror film with Winnie the Pooh as a killer as soon as the copyright lapsed on A.A. Milne’s charming bear. We can only imagine what awaits the Steamboat Willie version of Mickey Mouse, which has already been exploited nastily by John Oliver in a rare moment of unfunny schtick. (Full disclosure: I worked at Disney for seven years, so I am fond of that mouse.)

Public Domain content is viable training data, and using it for the purpose of compiling ‘new’ content from an LLM is acceptable. While one could argue that using only the collected works up to the 1920s means missing out on rather a lot of useful material, I’d argue the bigger deal is that you need to balance the inherent bias in a lot of that content. Yet just dropping everything you can possibly find online into the same bucket isn’t going to solve any of the problems with Content Sourcing.

Quite the reverse: indiscriminate scraping has become a huge problem for companies using AI. Amazon’s Alexa, for example, claimed the 2020 election was stolen because of all the discredited material that had been in its training set. Amazon acted quickly to address the situation, but this speaks to the peril of consuming whatever content you can get your crawlers on.

Google’s ham-fisted effort to make its Gemini image generation more inclusive took a bad turn when requests for pictures of German soldiers from 1943 produced images of Black and Asian Nazis, including women. Alphabet’s stock paid a heavy price ($90B in market value, to be exact) for that clear sign that the company had a lot of catching up to do.

Regardless, Public Domain content is a far cry from “Publicly Available” content. Publicly available means you’re welcome to consume it and, if you take it into your own head for ‘processing’, that work can influence the content you create. You cannot, however, spit out the same work and claim it as your own, or you’ll be in violation of the copyright and subject to legal action and, possibly, ridicule. That’s not what Picasso meant when he said that “great artists steal.”

More to the point of this article, the scale at which this is done by an AI versus an individual should not matter — it’s still theft.

Stealing at Scale

Did the LitRPG fantasy novel you had ChatGPT write for you steal mostly from Robert Jordan, Anne McCaffrey, and whoever wrote the He Who Fights With Monsters series? Is it 17% Robert Asprin, 3% Poul Anderson, 9% Marion Zimmer Bradley, 11% Fritz Leiber, but then a whole 25% R.A. Salvatore? After all, the algorithm behind the AI tool had some reason to draw on the influence of each work it devoured in its ‘training process.’

Somehow, in its black-box world, your AI tool pulled various elements from the novels it was trained on without author approval, guided by the language in your prompt, whatever might have been set in your user profile, and whatever the AI has been able to glean from your past usage. Even more notably, the AI tool may also be drawing on feedback it received when other CreAItors (cree-AI-tors, let’s say) asked the same model to create a similar story.

Once again, if the works of those fantasy novelists were used to train ChatGPT, they are all owed some money by the AI companies that are now making money using their work to craft ‘new’ work for their users. How do we sort out what specifically was used? That’s not the issue. The issue is that all of the creators whose work was used for training should be paid regularly for it.

Mystery Meat Content

Not so long ago, there was a lot of online discussion about ‘pink slime’ food additives being used to make ‘burgers’ for certain restaurants, including one run by some clown. In the same way, ‘content additives’ seep into your work without much clarity on how or why they are affecting your output.

Since we don’t know the methods used by these models, we can assume they add a healthy dose of whatever else the AI model believes will be useful in predicting how to craft this kind of story, piece of art, or song based on information from analogous situations. In fact, some of these connections are made in ways humans would never expect, much as AlphaGo beat human opponents with strategies that expert Go players did not anticipate.

Thus, your exploited content might even be used for things that have nothing to do with what you wrote about. Perhaps some shiny metadata associated with it convinced an AI that your work is relevant, for reasons that won’t help anyone win a board game like Go and don’t make any sense at all in the human world.

Sometimes we see AI tools make these kinds of connections and they seem…dumb. I laugh when LinkedIn’s algorithm keeps sending me adverts for Crescent — a bank that has a comparable color scheme and logo to my company, Credtent. The AI assumes it’s a good idea to put us together over and over again, perhaps calculating that those similarities will be a bridge for us. To me, it’s just a solid example of artificial idiocy looking for connections it doesn’t know how to make.

However much you may admire the output from the AI you used to create a short story, song, or other piece of art, don’t let AI apologists convince you it’s the same thing as you processing the work of other creatives into your own work of art. That took your brain power, your time, and your humanity to synthesize the work into something uniquely your own. That’s what Picasso meant: steal from the greats and make your own work. He definitely didn’t envision an AI doing the same thing at a comically huge scale for anyone who can type a prompt, or who can get yet another AI to write one for them.

Humans combine other works into their own content with creativity and critical thinking. AI uses an algorithm and, yes, that does matter.

Fair Use to the Rescue? No.

What about Fair Use? That’s the legal concept that allowed Mad Magazine to write parodies of media properties for decades and pretty much enabled the entire career of Weird Al Yankovic. Will crafting some kind of argument around the way AI slices and dices existing content into ‘new work’ fulfill the tenets of Fair Use? Probably not.

The Supreme Court has already ruled in a case, Andy Warhol Foundation for the Visual Arts v. Goldsmith (2023), that gives us a clear view of how the justices believe the work of artists needs to be respected when someone makes something derivative of it for commercial purposes. Basically, they rejected the Fair Use defense. I’ll leave it to the lawyers to argue this point, but as someone who spent years explaining how Fair Use allowed one of my previous companies to curate content, I can tell you that none of the four factors of Fair Use favors AI using copyrighted work to its digital heart’s content.

Content Sourcing Needs Repair and AI Needs New Thinking

That’s why we call AI training without consent or compensation “AI Theft.” The work of creative people who are still alive, kicking, and well within copyright needs to either be excluded from training data (if the creator prefers) or be compensated through a subscription for its ongoing use and value.

You might think this will upset OpenAI’s Sam Altman, since he’s already told us that their LLM is getting to ‘the end of content’ online. He’s implying that ChatGPT has already swallowed the whole of the Internet and that OpenAI might need to ‘make synthetic data’ just to keep feeding its omnivorous model, even if that means inauthentic, AI-created data. One does wonder whether they have considered what happens in other fields when we reach the Ouroboros stage of consumption. Newsflash: your output may be far less than ideal.

Credtent’s plans include helping LLM makers realize that they are better off training on licensed, credible content before they shift to other kinds of AI training. Despite the impressive work that ChatGPT and other tools have produced with models based solely on statistical correlation learning, neuroscientist Karl Friston has suggested that AI tools will need to shift to other types of learning. He’s not the only one suggesting that LLMs can do a lot but won’t get us to the next level. Technologist Nova Spivack has also suggested there’s a need for Cognitive AI before we reach the Holy Grail of AI: AGI (Artificial General Intelligence), where the robots are smarter than people and can generally do things they were never trained to do.

Whatever the next step turns out to be, is it wise to consume all that Mystery Meat Content just for the sake of more training material, even if it lacks relevance, legitimacy, and credibility? Plenty of people are saying ‘no.’ We’ve been seeing more companies build their own BabyLMs (or hire AI companies to do so) in an effort to get better performance overall by training on less content with more specific goals.

AI training will continue. But we can be conscious about what we’re doing so we can respect the work of creators. Credtent makes that possible.

Content Sourcing

The process for ethically sourcing your training content is not easy, but it’s also not impossible. You simply review the content you plan to train on beforehand, ensuring that you have licensed the right to use it or that the material in question is in the public domain. Credtent empowers AI tools to do that through our platform. Credtent’s neutral, third-party utility is amassing the wishes of thousands of content creators and is opening up that information for LLMs and other AI tools to ethically source content.

This is why Credtent created Content Sourcing badges, which we provide to AI tools that license Credtent’s database of creators, including their available subscriptions and their requests to be excluded from training.

If your AI tool trains solely on licensed content and public domain work, your company can potentially qualify for Credtent’s Ethically Sourced Content badge.

There’s more to it, so if you want to learn more, email us. We’re already working with AI companies that realize their customers want this kind of protection in place before they can feel safe from lawsuits over stolen work when using their tools.

Don’t Train On Me

There’s also one more possibility: the content you’re planning to train on might be owned by someone who does not want their work used for AI training. We need to respect the wishes of content creators while their work remains in copyright. It’s the work of their lives, and they deserve control of how it is used. Credtent enables creators to declare their work “Do Not Train” through our platform, and we opt them out of all LLMs and other AI tools.

That’s why Credtent is using a riff on the Gadsden Flag’s “Don’t Tread On Me.” My son Alaric, an artist who thinks ‘AI is sus’, created this flag and is waving it big time; he does not want his unique art used for training so people can churn out content based on what he worked so hard to bring into the world. Thus, Don’t Train On Me is his rallying cry, and it’s the one we’re hearing from many creatives around the world who want to opt out of AI through Credtent’s creator-friendly solution.

Credtent is your Content License Utility for the Age of AI

Credtent is a Public Benefit Corporation devoted to the idea that AI should respect the wishes of content creators while their work remains in copyright. It’s their hard work, the work of their lives, and they deserve ongoing compensation if it is being used. That’s why Credtent’s model for payment is a subscription. Credtent orchestrates the licensing and payment to creators who are willing to allow their content to be used for training, while also informing LLMs and other AI tools about which content needs to be excluded from training. You can sign up now to join our Waiting List and lock in our 2024 price for the life of your time with Credtent.

Much as the iPod and the $0.99 song largely put an end to people stealing music through Napster, LimeWire, and other MP3-sharing tools, we believe that Credtent’s frictionless system will enable creators to get paid real money for use of their work. It will also encourage AI tools to rely on credible, licensed content that protects them and their customers from future lawsuits and the dangers of sharing discredited content.

At Credtent, we’re on a mission to bring creativity and AI together in an ethical way. Join us!

Join the Movement — Sign Up Now at Credtent.org

As you can see from above, this article was written without any help from AI tools (other than the AI example images). It’s all human-created and, occasionally, a bit gonzo. Feel free to use the Credtent Content Origin logos you can find at credtent.org/blog.